One of the tasks an organization must perform is auditing its computing infrastructure for compliance with security best practices. Because modern computing systems are complex, automated tooling can help identify what must be done to bring a system into compliance. Lynis is an open-source auditing and system hardening tool for Linux systems. It scans the system configuration and produces an overview of system information and security issues that system administrators can use in automated audits.

Bring up the course VM. Within the VM, run the lynis command with the help flag to view the commands available for you to run with it.

lynis -h

Using lynis, run a local security scan on the VM. Navigate to the Lynis results section of the output and scroll through the suggestions.

- Take a screenshot of the hardening index the security scan has produced

Copy the entire results section to the clipboard and continue to the next step.
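The results section printed to the terminal is what you will paste into the LLM. Lynis also records the same findings in a machine-readable report, which can be easier to extract from. The sketch below assumes the default report location, /var/log/lynis-report.dat, and a key=value line format (`hardening_index=NN`, `suggestion[]=TEST-ID|text|...`). Confirm both against the report path your own scan prints at the end. The function name `lynis_summary` is hypothetical.

```shell
#!/usr/bin/env bash
# Summarize a Lynis report file. Assumed format: key=value lines such as
# "hardening_index=65" and "suggestion[]=TEST-ID|text|details|solution|".
lynis_summary() {
  local report="${1:-/var/log/lynis-report.dat}"
  # Print the hardening index as "Hardening index: NN".
  sed -n 's/^hardening_index=/Hardening index: /p' "$report"
  # Print each suggestion as "TEST-ID: text", dropping the remaining |-separated fields.
  grep '^suggestion\[]=' "$report" \
    | sed 's/^suggestion\[]=//' \
    | awk -F'|' '{ print $1 ": " $2 }'
}
```

If the report is readable only by root, copy it first (for example with `sudo cp`) and pass the copy's path to `lynis_summary`.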

While it might be useful to implement all of the suggestions, one might not have the time to do so. Prioritizing the suggestions might be helpful. Towards this end, bring up ChatGPT or Gemini. Use the prompt below to have the LLM produce the 5 most important suggestions to implement as a bulleted list, then paste the results section from your scan after the prompt.

You are a Linux system hardening expert. You've just run a Lynis system audit. Give a short bulleted list of the 5 most important suggestions to implement. The audit is below:

...

- Take a screenshot of the suggestions to implement

In the same session with the LLM, ask for the 5 easiest suggestions to implement.

- Take a screenshot of the suggestions to implement
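Before you carry out the easy fixes, consider keeping the current report. This lets you compare the before and after hardening indexes directly instead of reading them off two screenshots. The following is a minimal sketch. It assumes each saved report contains one `hardening_index=NN` line, as in the default Lynis report file. The helper names and the file names in the usage note are hypothetical.

```shell
#!/usr/bin/env bash
# Extract the number from the "hardening_index=NN" line of a saved Lynis report.
hardening_index() {
  sed -n 's/^hardening_index=//p' "$1"
}

# Print the hardening index of two reports and the difference between them.
compare_index() {
  local before after
  before=$(hardening_index "$1")
  after=$(hardening_index "$2")
  echo "before=${before} after=${after} change=$((after - before))"
}
```

Here is one possible workflow. Run `sudo cp /var/log/lynis-report.dat ~/lynis-before.dat` before applying the fixes. After the re-run, copy the report again to `~/lynis-after.dat`, adjusting ownership if needed. Then run `compare_index ~/lynis-before.dat ~/lynis-after.dat`. A higher index shows that the overall score improved, but not which individual suggestions were resolved. Diffing the `suggestion[]=` lines of the two files shows that.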

Then, perform each of the easiest suggestions and re-run the system audit.

- Take a screenshot of the hardening index the security scan has produced

Copy the results section of the new security scan to the clipboard. In the same LLM session, send the following prompt, then paste the results after it.

I implemented the suggestions and got the following. Did the commands work?

...

Answer the following:

- Would your results argue for or against using an LLM to automatically generate and execute system hardening commands?

Join the following room on TryHackMe: https://tryhackme.com/room/linuxserverforensics .

The room covers examining web server logs to discover traces of brute-force attacks, of malicious uploads, and of sensitive data exposure. It also examines a variety of ways adversaries can backdoor a system via creating cron jobs, cracking password hashes, inserting ssh keys, and discovering account credentials on the system in log, configuration, and history files. Complete the exercise.

- Take a screenshot showing completion of the room

Task #2: Note that curl is not counted as a browser of its own when counting tools in this task. One helpful command is below:

egrep -v "Mozilla|DirBuster" access.log

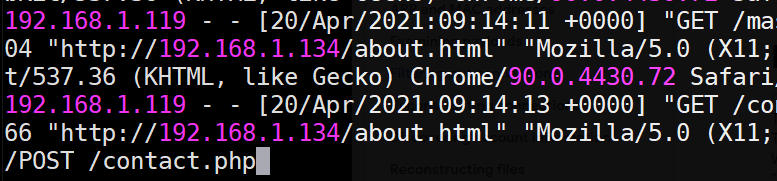
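You can also tally every distinct User-Agent in one pass instead of excluding the agents you already know about. This makes the tools used against the server easier to count. The sketch assumes access.log uses Apache's combined log format. In that format, splitting each line on double quotes leaves the User-Agent in the sixth field. `count_agents` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Tally distinct User-Agent strings in an Apache combined-format log, most frequent first.
# Splitting on '"' puts the request in $2, the referer in $4, and the User-Agent in $6.
count_agents() {
  awk -F'"' 'NF >= 6 { print $6 }' "$1" \
    | sort | uniq -c | sort -rn
}
```

Usage: `count_agents access.log`. Each output line is a count followed by one User-Agent string.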

Task #3: Binary data in access.log makes it difficult to find the POST to contact.php with grep. Instead, open the file with 'less', type '/' followed by "POST /contact.php", and press Enter; press 'n' to jump to the next match.

Another useful command, which filters the log entries so that only successful (200) GET requests made by DirBuster are shown, is below:

egrep "DirB" /var/log/apache2/access.log | egrep "GET" | egrep " 200 "
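The two filters above can also be written with commands that handle the binary lines directly and check the status code in its own column. This is a sketch that assumes the default Apache combined log format. In that format, whitespace-separated field 6 is the opening quote plus the method (`"GET`) and field 9 is the status code. `find_contact_posts` and `dirb_ok_gets` are hypothetical names. On recent GNU grep releases, egrep also prints a warning that it is obsolescent; `grep -E` is the equivalent.

```shell
#!/usr/bin/env bash
# Print lines containing "POST /contact.php"; -a makes grep treat binary data as text.
find_contact_posts() {
  grep -a -F 'POST /contact.php' "$1"
}

# Print DirBuster GET requests that returned 200. The check is on the status field
# itself, since " 200 " anywhere in a line can also match a 200-byte response size.
dirb_ok_gets() {
  awk '/DirB/ && $6 == "\"GET" && $9 == "200"' "$1"
}
```

`find_contact_posts /var/log/apache2/access.log` prints the matching lines themselves rather than only a binary-file-matches notice. `dirb_ok_gets /var/log/apache2/access.log` replaces the three-stage egrep pipeline.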

Operating systems log each login in order to allow the administrator to track the activity on a system in case of any issues. On Linux, two utilities are often used to view prior logins: lastlog2 and last.

One can view last logins on a per-account basis using lastlog2. When run without parameters, it lists the most recent login recorded for each account. One can also examine a specific account by passing its username with the -u flag. On your Kali VM, run the command.

lastlog2

- Take a screenshot showing your last logins

To get a raw, chronological list of recent logins, one can use the last command. Run the command below to view the 10 most recent logins on the machine.

last -n 10

- Take a screenshot showing the most recent logins

The commands reveal the IP address of the machine you used to log into the VM. We can look up the geographic location of this IP address using any number of free APIs. One such API is IP-API: given an IP address, it returns its country, region, city, latitude, and longitude in a JSON object. One can also limit the response to specific information by passing the fields URL parameter. Fill in your IP address in the commands below to demonstrate this.

curl "http://ip-api.com/json/<FMI>"

curl "http://ip-api.com/json/<FMI>?fields=city"

- Take a screenshot showing the city returned by IP API.
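Several fields can be requested at once as a comma-separated list (for example fields=country,city). A small helper that builds the URL keeps that formatting in one place. This is a sketch: `ipapi_url` is a hypothetical name, and the field names you pass must match those listed in IP-API's documentation.

```shell
#!/usr/bin/env bash
# Build an ip-api.com JSON URL. The optional second argument is a
# comma-separated list of field names, e.g. "city" or "country,city,lat,lon".
ipapi_url() {
  local ip="$1" fields="$2"
  if [ -n "$fields" ]; then
    printf 'http://ip-api.com/json/%s?fields=%s\n' "$ip" "$fields"
  else
    printf 'http://ip-api.com/json/%s\n' "$ip"
  fi
}
```

Usage: `curl "$(ipapi_url 203.0.113.7 country,city)"`, substituting your own address. Keep the double quotes. The `?` in the URL is a glob character, and zsh, Kali's default shell, reports "no matches found" when an unquoted glob matches no file.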

As a system administrator, it might be useful to take a list of the most recent logins, pull out the IP addresses, and then perform a geographic lookup on each to detect anomalies. We will do so by incrementally using the tools we have covered so far in this codelab.

First, we'll use last to get the recent logins and pipe its output to egrep. egrep keeps only the entries containing an IP address, using a regular expression that matches four groups of digits separated by periods. From this output, we then use awk to print the third field, which contains the IP address. Finally, we sort the output and call uniq to remove duplicates, eliminating redundant lookups. Run the commands below and view their output.

last | egrep " [0-9]+\.[0-9]+\.[0-9]+\.[0-9]+ "

last | egrep " [0-9]+\.[0-9]+\.[0-9]+\.[0-9]+ " | awk '{print $3}'

last | egrep " [0-9]+\.[0-9]+\.[0-9]+\.[0-9]+ " | awk '{print $3}' | sort | uniq

Given this list of IP addresses, we can feed it to a loop that calls IP-API once per address to return the associated city. For clarity, we'll assign the list of IP addresses to a shell variable using command substitution with backticks (` `), then echo the variable within the for loop.

IPS=`last | egrep " [0-9]+\.[0-9]+\.[0-9]+\.[0-9]+ " | awk '{print $3}' | sort | uniq`

for i in `echo $IPS`

do

echo -n "$i: "

curl "http://ip-api.com/json/${i}?fields=city"

echo

done

- Take a screenshot showing the cities returned. Are all of the logins from Portland?
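The pipeline and loop above can also be packaged as reusable functions. These read addresses from a pipe, print each address next to its city, and pause between requests. This is a sketch under stated assumptions. `extract_ips` and `geolocate_ips` are hypothetical names. `extract_ips` uses `grep -o`, so an address's column position in the last output no longer matters. `geolocate_ips` assumes the `?fields=city` reply has the form `{"city":"..."}`. It pauses 1.5 seconds between calls because, at the time of writing, ip-api's free endpoint is documented as allowing about 45 requests per minute. Adjust `GEO_DELAY` if that limit changes.

```shell
#!/usr/bin/env bash
# Print each distinct IPv4-looking address found in the text on stdin.
# grep -o emits only the matched text, so the column position does not matter.
# The pattern is deliberately loose (it would also accept 999.1.1.1).
extract_ips() {
  grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' | sort -u
}

# Read one IP per line from stdin and print "IP<TAB>city".
# Assumptions: ip-api.com's reply to ?fields=city looks like {"city":"Portland"},
# and GEO_DELAY seconds between calls stays under the free tier's rate limit.
geolocate_ips() {
  local ip city
  while read -r ip; do
    [ -n "$ip" ] || continue
    # Quote the URL: '?' is a glob character, and zsh aborts when a glob matches nothing.
    city=$(curl -s "http://ip-api.com/json/${ip}?fields=city" \
      | sed -n 's/.*"city":"\([^"]*\)".*/\1/p')
    printf '%s\t%s\n' "$ip" "${city:-unknown}"
    sleep "${GEO_DELAY:-1.5}"
  done
}
```

Run it as `last | extract_ips | geolocate_ips`. Addresses for which no city comes back, such as private ranges, are printed as unknown.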

Unfortunately, one can never fully trust log files, since an adversary who obtains access can modify them. Logs must be made tamper-resistant, for example by sending them off the machine, as was done in an infamous hacking case. Begin by examining the man page for the lastlog2 command, scrolling to the bottom.

- What file does it use to store its database of previous user login times?

As it turns out, the system utilizes a sqlite3 database to store the last login per user. If the user has never logged in before, as is the case with most system accounts, the entry will be null. We can directly modify this database to remove our prior login. Bring up the database via the sqlite3 tool.

sudo sqlite3 <name_of_file>

Then, within the sqlite3 tool, list the tables that store the information.

.tables

Examine the schema of the table to find the names of its columns, noting the primary key column, which identifies the user account each entry belongs to.

.schema <name_of_table>

Then execute a SQL statement to list all entries in the table.

select * from <name_of_table>;

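Then, delete the entry for your account. Because the key is a text value, enclose it in single quotes, and end the statement with a semicolon so that sqlite3 executes it.

delete from <name_of_table> where <id_column>='<id>';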

Exit the sqlite3 tool via Ctrl+d.

Run lastlog2 to show that the system believes you have not logged in.

lastlog2 -u <username>

- Take a screenshot of the output.

The last command can also be easily tampered with. It uses a different database to store its data. Begin by examining the man page for the last command.

- What file does it use to store its database of user logins?

We will be modifying the file used for the last command. While we could wipe out all of its entries, it would be pretty clear to an administrator that the system was compromised. A sneakier thing to do would be to eliminate the last several login events from the file.

As before, the system utilizes a sqlite3 database to store the last logins. We can directly modify this database to remove prior logins. Bring up the database via the sqlite3 tool.

sudo sqlite3 <name_of_file>

Then, within the sqlite3 tool, list the tables that store the information.

.tables

Examine the schema of the table to find the names of its columns, noting the primary key column, which is the numeric identifier for each login event.

.schema <name_of_table>

Then execute a SQL statement to list all entries in the table.

select * from <name_of_table>;

Then, delete the most recent entry (i.e., the one with the highest identifier). End the statement with a semicolon so that sqlite3 executes it.

delete from <name_of_table> where <id_column>=<id>;

Exit the sqlite3 tool via Ctrl+d.

Run last to show that the most recent login has been deleted and that your session is no longer listed as "still logged in".

last

- Take a screenshot of the output.

By default, Linux keeps only a finite amount of log data in /var/log. From a security perspective, it is important to understand the time frame over which log data is collected and retained. On Linux systems, the logrotate command manages the automated rotation of log data, and an administrator can configure a rotation policy for each log.

On the Kali VM, begin by listing the log files for failed logins and for authentication attempts.

ls -l /var/log/btmp* /var/log/auth.log*

As the listing shows, the logs have different rotation policies. One of the two uses settings that map to logrotate's default policy, while the other has a custom policy. The policies differ in how often the logs are rotated, how many old versions are kept, and whether compression is used. Read the man page for logrotate to find the file that configures the options used for log rotation. Then, examine the file and answer the following questions:

- What is the frequency of rotation and the number of backlogs kept by default?

- How old does a logged event have to be before it is forgotten by default?
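The two questions above come down to simple arithmetic. With a rotation period P and N rotated copies kept, an event is deleted somewhere between N × P and (N + 1) × P after it is written, depending on where in the current period it was logged. The sketch below reads the first rotation-frequency keyword and the first rotate directive from a config file and prints that range. `retention_bounds` is a hypothetical name. Months are approximated as 30 days, and options such as size, maxage, or hourly rotation are ignored.

```shell
#!/usr/bin/env bash
# Estimate how long logrotate keeps an event, based on one config file.
# Uses the first frequency keyword and the first "rotate N" directive found;
# with N rotated copies kept, an event survives between N and N+1 periods.
retention_bounds() {
  awk '
    !freq && $1 ~ /^(daily|weekly|monthly|yearly)$/ { freq = $1 }
    !seen && $1 == "rotate" { count = $2 + 0; seen = 1 }
    END {
      if (freq == "" || !seen) { print "frequency or rotate directive not found"; exit 1 }
      # Approximate period lengths in days; "monthly" is treated as 30 days.
      days["daily"] = 1; days["weekly"] = 7; days["monthly"] = 30; days["yearly"] = 365
      d = days[freq]
      printf "%s, rotate %d: events kept roughly %d to %d days\n", freq, count, count * d, (count + 1) * d
    }' "$1"
}
```

Usage: `retention_bounds <config_file>`. Run it first on the main configuration file named in the man page, then on the custom policy file.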

The configuration file also includes a directory containing custom log rotation policies that override the defaults. These files are typically named after the log they control. Find the configuration for the log that has a custom policy defined.
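To find the override for a particular log, search the include directory for files that mention the log's path. This is a sketch. `find_policy` is a hypothetical name, and it matches the path as a plain substring, so /var/log/foo would also match a stanza for /var/log/foo.1.

```shell
#!/usr/bin/env bash
# Print the name and contents of every regular file in a logrotate include
# directory that mentions the given log path (plain substring match).
find_policy() {
  local dir="$1" logpath="$2" f
  for f in "$dir"/*; do
    [ -f "$f" ] || continue
    if grep -q -F -- "$logpath" "$f"; then
      echo "== $f"
      cat "$f"
    fi
  done
}
```

Usage: `find_policy <include_directory> <log_path>`.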

- What is the frequency of rotation and the number of backlogs kept in this policy?

- How old does a logged event have to be before it is forgotten in this policy?

Join the following room on TryHackMe: https://tryhackme.com/room/introtosiem .

The room covers the visibility and analysis of events throughout an enterprise and how modern tools are used to detect and react to suspicious events across an entire network. Complete the exercise.

- Take a screenshot showing completion of the room