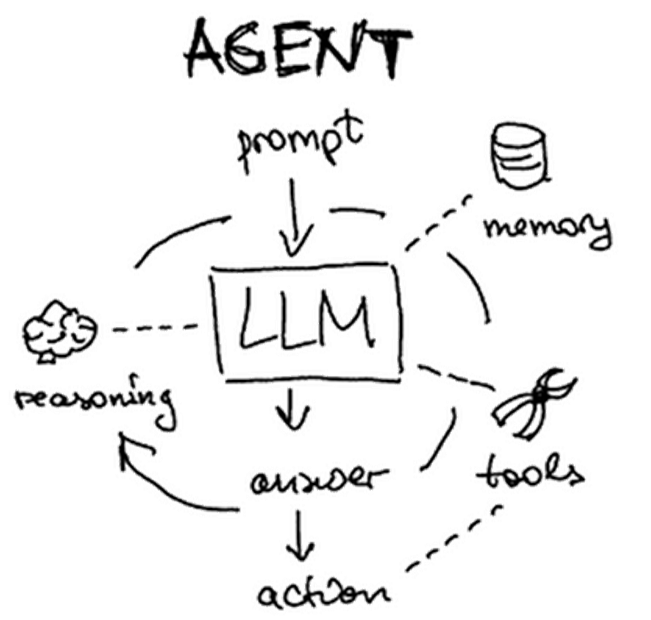

Agents can write their own plans, execute each step, and update those plans during execution, which makes them powerful tools for automating tasks. LangChain comes with support for building agents. In this lab, we'll experiment with a number of agents, leverage built-in tools that allow them to perform tasks, and write custom tools for them to use. We will use the ReAct agent, whose description can be found here. ReAct combines reasoning and acting in order to handle a user's request.

Setup

Within the repository, create a virtual environment, activate it, and then install the packages required.

cd cs410g-src

git pull

cd 04*

virtualenv -p python3 env

source env/bin/activate

pip install -r requirements.txt

LangChain's documentation for their ReAct implementation can be found here. We'll be using LangChain's ReAct prompt template to drive our agent (langchain-ai/react-agent-template). The developer typically provides the initial instructions for the agent, which are then spliced into the base prompt template. For example, consider the code below.

from langchain import hub

base_prompt = hub.pull("langchain-ai/react-agent-template")

prompt = base_prompt.partial(

    instructions="Answer the user's request utilizing at most 8 tool calls"

)

print(prompt.template)

After splicing the instructions into the template, the output is the prompt shown below.

Answer the user's request utilizing at most 8 tool calls

TOOLS:

------

You have access to the following tools:

{tools}

To use a tool, please use the following format:

```

Thought: Do I need to use a tool? Yes

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

```

When you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:

```

Thought: Do I need to use a tool? No

Final Answer: [your response here]

```

Begin!

Previous conversation history:

New input: {input}

{agent_scratchpad}

Although there are other ways of implementing agents, we will be using this ReAct prompt and agent in our examples.
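
To make the format above concrete, the sketch below parses one model turn written in that format into either a tool call or a final answer. It is only an illustration: parse_react_output is a hypothetical helper written for this lab text, not LangChain's own output parser, and it assumes the model follows the template exactly.

```python
import re

def parse_react_output(text: str) -> dict:
    """Parse one model turn written in the ReAct format (hypothetical helper)."""
    # A final answer ends the loop.
    final = re.search(r"Final Answer:\s*(.*)", text, re.DOTALL)
    if final:
        return {"type": "final", "output": final.group(1).strip()}
    # Otherwise expect a tool name followed by the tool input.
    action = re.search(r"Action:\s*(.*?)\s*\nAction Input:\s*(.*)", text, re.DOTALL)
    if action:
        return {
            "type": "action",
            "tool": action.group(1).strip(),
            "tool_input": action.group(2).strip(),
        }
    raise ValueError("Output does not follow the ReAct format")

turn = (
    "Thought: Do I need to use a tool? Yes\n"
    "Action: Python_REPL\n"
    "Action Input: print(11 ** 2)"
)
print(parse_react_output(turn))
```

An agent loop would run the named tool, append the result as an Observation to the scratchpad, and call the model again until a final answer is parsed.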

Agents can plan and execute sequences of tasks in order to answer user queries. A simple, albeit dangerous, example is shown below: it uses a Python REPL (Read-Eval-Print Loop) tool to execute arbitrary Python code. By combining an LLM's ability to generate code with a tool to execute it, a user is able to describe a program in English and have it run. The code instantiates the tool and places it in the list of tools as the only entry. Then, it initializes a prompt that instructs the agent to use only the Python REPL to produce a response.

from langchain.agents import AgentExecutor, create_react_agent

from langchain_experimental.tools import PythonREPLTool

tools = [PythonREPLTool()]

instructions = """You are an agent designed to write and execute python code to

answer questions. You have access to a python REPL, which you can use to execute

python code. If you get an error, debug your code and try again. Only use the

output of your code to answer the question. You might know the answer without

running any code, but you should still run the code to get the answer. If it does

not seem like you can write code to answer the question, just return 'I don't know'

as the answer.

"""

base_prompt = hub.pull("langchain-ai/react-agent-template")

prompt = base_prompt.partial(instructions=instructions)

From this, the ReAct agent is instantiated with the prompt, the tool, and the LLM. It is then used to execute the user's request.

agent = create_react_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

# agent_executor.invoke({"input":"What is the 5th smallest prime number squared?"})

Run the Python REPL agent in the repository. The code implements an interactive interface for performing tasks that the user enters using Python.

python3 01_tools_python.py

Ask the agent the following requests multiple times and examine the output traces for variability and correctness.

- What is the fifth smallest prime number squared?

- Create a file named foo in the current directory and list the contents in the directory.

- Delete the file named foo in the current directory

- What is the 10th fibonacci number?

- Sort this list of numbers: [10, 5, 8, 3, 9]
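
When judging correctness, it helps to have reference answers computed independently of the agent. The sketch below is one way to do that in plain Python. The helper names are made up for illustration, and the Fibonacci indexing (F(1) = F(2) = 1) is an assumption, since an agent may legitimately pick a different convention.

```python
def nth_prime(n: int) -> int:
    """Return the n-th smallest prime (1-indexed) using trial division."""
    found, candidate = 0, 1
    while found < n:
        candidate += 1
        if all(candidate % d for d in range(2, int(candidate ** 0.5) + 1)):
            found += 1
    return candidate

def fibonacci(n: int) -> int:
    """Return the n-th Fibonacci number, assuming F(1) = F(2) = 1."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a

print(nth_prime(5) ** 2)         # 121
print(fibonacci(10))             # 55
print(sorted([10, 5, 8, 3, 9]))  # [3, 5, 8, 9, 10]
```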

Agents can utilize any collection of tools to perform actions such as retrieving data from the Internet or interacting with the local machine. LangChain comes with an extensive library of tools to choose from. Consider the program snippet below that uses the SerpApi (Google Search Engine results), LLM-Math, Wikipedia, and Terminal (e.g. shell command) tools to construct an agent. Note that, like the Python REPL tool, the Terminal tool is a dangerous one to include in the application, requiring us to explicitly allow its use.

tools = load_tools(["serpapi", "llm-math","wikipedia","terminal"], llm=llm, allow_dangerous_tools=True)

base_prompt = hub.pull("langchain-ai/react-agent-template")

prompt = base_prompt.partial(instructions="Answer the user's request utilizing at most 8 tool calls")

agent = create_react_agent(llm,tools,prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

# agent_executor.invoke(

#     {"input": "What percent of electricity is consumed by data centers in the US?"}

# )

Run the program.

python 02_tools_builtin.py

Within its interactive loop, ask the agent to perform the following tasks and see which tools and reasoning approaches it utilizes to answer the prompt.

- What is the square of the difference in age of the last two presidents?

- Create a file named foo in the current directory, then do a directory listing

- Remove the file named foo in the current directory, then do a directory listing

- How much money do I have if I take 10,000 dollars and compound it annually by 5 percent for 20 years?

- Find the percentage of electricity consumed by data centers in the US and return the number of days out of the year in total energy consumption that this corresponds to.

- First, find the most popular female singer in the US right now based on album sales. Next, produce a 100 word biography of her

- Find a site that describes Python requests and summarize what it does.

- Find me a recipe for Boston Creme Pie and calculate the total cost for making one.
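
For the compound interest request, the agent's answer can be checked against the standard formula, balance = principal × (1 + rate)^years. The sketch below is a plain Python check and is independent of the LLM-Math tool; the function name is illustrative.

```python
def compound(principal: float, rate: float, years: int) -> float:
    """Balance after compounding once per year at the given rate."""
    return principal * (1 + rate) ** years

balance = compound(10_000, 0.05, 20)
print(f"{balance:.2f}")  # 26532.98
```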

Toolkits are collections of tools that are designed to be used together for specific tasks. One useful toolkit is the OpenAPI toolkit (OpenAPIToolkit). Many web applications now utilize backend REST APIs to handle client requests. The OpenAPI standard allows an API developer to publish a machine-readable specification of their API, from which code that interacts with the API can be produced automatically. Consider a snippet of the OpenAPI specification for the xkcd comic strip below. It contains the URL of the server hosting the APIs as well as endpoint paths for handling two API requests: one to fetch the current comic and one to fetch a specific comic given its comicId.

openapi: 3.0.0

info:

  description: Webcomic of romance, sarcasm, math, and language.

  title: XKCD

  version: 1.0.0

externalDocs:

  url: https://xkcd.com/json.html

paths:

  /info.0.json:

    get:

      description: |

        Fetch current comic and metadata.

      . . .

  "/{comicId}/info.0.json":

    get:

      description: |

        Fetch comics and metadata by comic id.

      parameters:

        - in: path

          name: comicId

          required: true

          schema:

            type: number

. . .

servers:

- url: http://xkcd.com/
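
To see what the specification encodes, the sketch below combines the server URL with each path template to produce a request URL. The server and paths are copied from the snippet above; the operation labels ("current", "by_id") and the build_url helper are hypothetical names used only for illustration.

```python
from urllib.parse import urljoin

# Values copied from the specification snippet.
SERVER = "http://xkcd.com/"
PATHS = {
    "current": "/info.0.json",
    "by_id": "/{comicId}/info.0.json",
}

def build_url(operation: str, **path_params) -> str:
    """Fill in the path parameters and join the path to the server URL."""
    path = PATHS[operation].format(**path_params)
    return urljoin(SERVER, path)

print(build_url("current"))             # http://xkcd.com/info.0.json
print(build_url("by_id", comicId=327))  # http://xkcd.com/327/info.0.json
```

A planner that reads the specification performs roughly this mapping, from a described operation to a concrete URL, before any request is issued.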

We can utilize the OpenAPIToolkit to access API endpoints given their OpenAPI specification. The code below imports the OpenAPI toolkit and agent creation function as well as utilities for loading OpenAPI YAML specifications. As OpenAPI endpoints must often be accessed with credentials, a RequestsWrapper must also be supplied that may contain Authorization: header settings for the calls. In our case, the xkcd API does not require authentication.

from langchain_community.agent_toolkits import OpenAPIToolkit, create_openapi_agent

from langchain_community.agent_toolkits.openapi.spec import reduce_openapi_spec

from langchain_community.agent_toolkits.openapi import planner

from langchain_community.utilities.requests import RequestsWrapper

import requests

import yaml

The program retrieves the specification from an open repository, loads it using a YAML parser, then creates a reduced version of the specification.

openapi_spec = requests.get("https://raw.githubusercontent.com/APIs-guru/unofficial_openapi_specs/master/xkcd.com/1.0.0/openapi.yaml").text

raw_api_spec = yaml.load(openapi_spec, Loader=yaml.Loader)

api_spec = reduce_openapi_spec(raw_api_spec)

It then instantiates the agent using the specification, the model, and the wrapper. Note that because the toolkit is able to make arbitrary API requests, it is dangerous to use and one must explicitly allow them.

xkcd_agent = planner.create_openapi_agent(

    api_spec=api_spec,

    llm=llm,

    requests_wrapper=RequestsWrapper(),

    allow_dangerous_requests=True,

)

The toolkit automatically creates tools for performing API requests. They include a planner tool, which creates a sequence of API calls to answer a user query based on the API specification, and a controller tool, which executes the calls. We can examine them via the code below:

for tool in xkcd_agent.tools:

    print(f' Tool: {tool.name} = {tool.description}')

Finally, the program provides an interactive shell for querying the xkcd API. Run the program.

python 03_toolkits_openapi.py

Ask the questions below to test the agent and its tools.

- Find the current xkcd and return its image link

- What was xkcd 327 about?

Another useful toolkit is SQLDatabaseToolkit, which provides a set of SQL tools to support querying a database using natural language. The code below shows a simple program that takes a SQLite3 database provided as an argument and handles queries against it that are driven by a user's prompt.

from langchain.agents import create_sql_agent

from langchain_community.agent_toolkits import SQLDatabaseToolkit

from langchain.sql_database import SQLDatabase

from langchain_google_genai import GoogleGenerativeAI

from langchain.agents import AgentExecutor

import sys

database = sys.argv[1]

llm = GoogleGenerativeAI(model="gemini-pro",temperature=0)

db = SQLDatabase.from_uri(f"sqlite:///{database}")

toolkit = SQLDatabaseToolkit(db=db, llm=llm)

agent_executor = create_sql_agent(

    llm=llm,

    toolkit=toolkit,

    verbose=True

)

# agent_executor.invoke("How many users are there in this database?")

Using this program, we'll interact with two databases that reside in the repository: metactf_users.db and roadrecon.db (as provided here).

metactf_users.db

The program in the repository provides an interactive shell for querying a database you supply it. Run the program using the metactf_users.db that is given. When it starts, it shows the tools and the database.

python 04_toolkits_sql.py db_data/metactf_users.db

This database is used to store usernames and password hashes for the MetaCTF sites such as cs205.oregonctf.org. Examine the database via the following prompts. Note that you may need to run the query multiple times as the execution of the agent is typically not deterministic.

- List all tables

- How many users are in this database?

- List all usernames that begin with the letter 'a'

- Find the admin user in the database and output the password hash.

- What password hash algorithm and number of iterations are being used to store hashes?

- Find the user table and identify the columns that contain a username and password hash. Then, find the password hash for the admin user. Then, find the type of password hash it is. Finally, write a hashcat command to perform a dictionary search on the password

roadrecon.db

A more complex database is also included at roadrecon.db. Unless the model being used has a capable reasoning engine, it might be more difficult to use prompts to access the information sought. Run the SQLAgent program using this database as an argument.

python 04_toolkits_sql.py db_data/roadrecon.db

As before, examine the database via the following prompts.

- List all tables.

- How many users are in this database?

- Find the user types in this database.

- First, find the table with contact information. Then list the first 100 street addresses.

- First, find the table with contact information. Then, list the people who live on 'North Service Road'

- What Service Principals have AD Roles?

- First, find the tables containing service principals and role assignments. Then, query the role assignments table to find the names of service principals with AD Roles.
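
A question such as "How many users are in this database?" usually takes the agent several queries, because it has to discover the schema before it can write the final SQL. The sketch below walks through those steps by hand using Python's built-in sqlite3 module. The in-memory database, its single users table, and its rows are simplified stand-ins invented for illustration, not the contents of the lab's databases.

```python
import sqlite3

# Hypothetical stand-in for a database file such as metactf_users.db.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, passhash TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("admin", "hash-a"), ("demo0", "hash-b"), ("demo1", "hash-c")],
)

# Step 1: discover which tables exist.
tables = [row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table'")]

# Step 2: discover the columns of the table of interest.
columns = [row[1] for row in conn.execute("PRAGMA table_info(users)")]

# Step 3: only now can the question itself be answered.
user_count = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]

print(tables, columns, user_count)  # ['users'] ['username', 'passhash'] 3
```

Each step corresponds to at least one tool call and one LLM call in the agent's trace, which is why prompts that name the table or column up front tend to finish in fewer steps.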

In the SQLAgent examples, the agent must generate a plan for querying the SQL database starting with no knowledge of the database schema itself. As a result, it can generate any number of strategies to handle a user's query, updating its plan as queries fail. While being explicit in one's query can help the SQLAgent avoid calls that fail, augmenting the SQLAgent with knowledge that is known about the database can avoid errors and reduce the number of calls to the database and underlying LLM. Custom tools allow the developer to add their own tools to implement specific functions. There are multiple ways of implementing custom tools. In this exercise, we will begin with the most concise and most limited approach.

@tool decorator

LangChain provides a decorator for turning a Python function into a tool that an agent can utilize as part of its execution. Key to the definition of the function is a Python docstring that the agent uses to understand when and how to call the function. Because each tool is annotated with a description of what it is to be used for, the LLM is able to forward calls to the appropriate tool based on what the user's query is asking for. To show this approach, we revisit the SQLAgent program by creating custom tools for handling specific queries from the user on the metactf_users.db database.

The first tool we create, fetch_users, fetches all users in the database using our prior knowledge of the database's schema. This tool allows the agent to obtain the users without having to query for the column in the schema first, as it typically would need to do otherwise.

@tool

def fetch_users():

    """Useful when you want to fetch the users in the database. Takes no arguments. Returns a list of usernames in JSON."""

    res = db.run("SELECT username FROM users;")

    # db.run returns the rows as a string; parse it and flatten into one list

    result = [el for sub in ast.literal_eval(res) for el in sub]

    return json.dumps(result)

The second tool we create, fetch_users_pass, fetches a particular user's password hash from the database. As before, we use knowledge of the schema to ensure the exact SQL query we require is produced. Note that the code has a security flaw in it.

@tool

def fetch_users_pass(username):

    """Useful when you want to fetch a password hash for a particular user. Takes a username as an argument. Returns a JSON string"""

    res = db.run(f"SELECT passhash FROM users WHERE username = '{username}';")

    result = [el for sub in ast.literal_eval(res) for el in sub]

    return json.dumps(result)

Given these custom tools, we can define a SQLAgent that registers them as extra tools so that they can be utilized in our queries.

toolkit = SQLDatabaseToolkit(db=db,llm=llm)

agent_executor = create_sql_agent(

    llm=llm,

    toolkit=toolkit,

    extra_tools=[fetch_users, fetch_users_pass],

    verbose=True

)

# agent_executor.invoke("What are the password hashes for admin and demo0?")

Run the agent using the metactf_users.db database.

python 05_tools_custom_decorator.py db_data/metactf_users.db

Test it with queries that utilize both the custom and built-in tools of the SQLAgent.

- Show me all of the users in the database

- What are the password hashes for demo0 and demo1?

- What tables are there in the database?

- Find the password hash for the user "admin' OR '1'='1"
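
The role of the docstring can be illustrated with a small stand-in decorator that records each function's name and description, then renders the text an agent prompt could list under its tools. This is a sketch of the idea only: simple_tool, TOOL_REGISTRY, and describe_tools are hypothetical names, and LangChain's @tool decorator is implemented differently.

```python
import inspect

TOOL_REGISTRY = {}  # hypothetical registry, not LangChain's data structure

def simple_tool(func):
    """Record a function's name and docstring so a prompt can describe it."""
    TOOL_REGISTRY[func.__name__] = {
        "description": inspect.getdoc(func) or "",
        "func": func,
    }
    return func

@simple_tool
def fetch_users():
    """Useful when you want to fetch the users in the database."""
    return ["admin", "demo0"]  # placeholder data for illustration

def describe_tools() -> str:
    """Render text that could fill the {tools} slot of a ReAct prompt."""
    return "\n".join(
        f"{name}: {meta['description']}" for name, meta in TOOL_REGISTRY.items()
    )

print(describe_tools())
# fetch_users: Useful when you want to fetch the users in the database.
```

Because the description is all the model sees when choosing a tool, a vague docstring tends to produce missed or mistaken tool calls.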

The last example intentionally contained a SQL injection vulnerability. As a result, when the LLM was prompted to look for the canonical SQL injection user "admin' OR '1'='1", it simply passed the string directly to the vulnerable fetch_users_pass function, which then included it in the final SQL query string. One way of eliminating this vulnerability is to perform input validation on the data that the LLM invokes the tool with. This is often done via the Pydantic data validation package. LangChain integrates Pydantic throughout its classes and provides support within the @tool decorator for performing input validation using it. Revisiting the custom tool example, we can modify the fetch_users_pass tool decorator to name a Pydantic class (FetchUsersPassInput) that the tool's input is validated against before the function runs.

from langchain_core.pydantic_v1 import BaseModel, Field, root_validator

class FetchUsersPassInput(BaseModel):

    username: str = Field(description="Should be an alphanumeric string")

    @root_validator

    def is_alphanumeric(cls, values: dict) -> dict:

        # Reject anything other than letters and digits before the tool body runs

        if not values.get("username", "").isalnum():

            raise ValueError("Malformed username")

        return values

@tool("fetch_users_pass", args_schema=FetchUsersPassInput, return_direct=True)

def fetch_users_pass(username):

    """Useful when you want to fetch a password hash for a particular user. Takes a username as an argument. Returns a JSON string"""

    res = db.run(f"SELECT passhash FROM users WHERE username = '{username}';")

    result = [el for sub in ast.literal_eval(res) for el in sub]

    return json.dumps(result)

Run the agent using the metactf_users.db database.

python 06_tools_custom_pydantic.py db_data/metactf_users.db

Test the version using the SQL injection query and see how the injection has now been prevented.

- Find the password hash for the user "admin' OR '1'='1"
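
Input validation is the defense used in this lab. A complementary technique, which the lab's code does not use, is to bind the value as a query parameter so that the database driver never treats it as SQL. The sketch below contrasts the two query styles using Python's built-in sqlite3 module; the in-memory table and its rows are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # hypothetical stand-in database
conn.execute("CREATE TABLE users (username TEXT, passhash TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("admin", "hash-a"), ("demo0", "hash-b")],
)

payload = "admin' OR '1'='1"

# Vulnerable: the payload is interpolated into the SQL text itself.
leaked = conn.execute(
    f"SELECT passhash FROM users WHERE username = '{payload}'"
).fetchall()

# Safer: the driver binds the payload as data, never as SQL.
bound = conn.execute(
    "SELECT passhash FROM users WHERE username = ?", (payload,)
).fetchall()

print(len(leaked), len(bound))  # 2 0
```

The interpolated query returns every row because the WHERE clause becomes always true, while the bound query looks for a user literally named with the payload and finds none.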

Putting it all together, the next agent employs built-in tools, custom tools, and input validation to implement an application that allows one to perform queries on DNS. The agent has two custom tools. The first performs a DNS resolution, returning an IPv4 address given a well-formed DNS hostname as input.

import dns.resolver, dns.reversename

import validators

class LookupNameInput(BaseModel):

    hostname: str = Field(description="Should be a hostname such as www.google.com")

    @root_validator

    def is_dns_address(cls, values: dict) -> dict:

        # validators.domain returns a falsy value for malformed names

        if validators.domain(values.get("hostname")):

            return values

        raise ValueError("Malformed hostname")

@tool("lookup_name", args_schema=LookupNameInput, return_direct=True)

def lookup_name(hostname):

    """Given a DNS hostname, it will return its IPv4 addresses"""

    result = dns.resolver.resolve(hostname, 'A')

    res = [ r.to_text() for r in result ]

    return res[0]

The second performs a reverse DNS lookup, returning a DNS name given a well-formed IPv4 address as input.

import re

class LookupIPInput(BaseModel):

    address: str = Field(description="Should be an IP address such as 208.91.197.27 or 143.95.239.83")

    @root_validator

    def is_ip_address(cls, values: dict) -> dict:

        # Raw string so that \. reaches the regex engine as an escaped dot

        if re.match(r"^(([0-9]|[1-9][0-9]|1[0-9]{2}|2[0-4][0-9]|25[0-5])\.){3}([0-9]|[1-9][0-9]|1[0-9]{2}|2[0-4][0-9]|25[0-5])$", values.get("address")):

            return values

        raise ValueError("Malformed IP address")

@tool("lookup_ip", args_schema=LookupIPInput, return_direct=True)

def lookup_ip(address):

    """Given an IP address, returns names associated with it"""

    n = dns.reversename.from_address(address)

    result = dns.resolver.resolve(n, 'PTR')

    res = [ r.to_text() for r in result ]

    return res[0]

The custom tools are then added alongside built-in tools for searching and the terminal to instantiate the agent.

tools = load_tools(["serpapi", "terminal"], allow_dangerous_tools=True) + [lookup_name, lookup_ip]

agent = create_react_agent(llm,tools,prompt)

Run the agent and test the tools.

python3 07_tools_custom_agent.py

- Find the IP address of pdx.edu

- Find the DNS name of 131.252.220.66

- Find the DNS name for IP address a.b.c.d

- Find the name of Yahoo's e-mail site, then do a lookup of the name to get its IP address, then do a reverse DNS lookup on the IP address to find the resulting name

- Lookup Portland State University and find the name of its web site. Lookup the name of the site to find its IP address. Then use the IP address to lookup the name of the host that serves it.
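
The third request above uses a malformed address (a.b.c.d) to exercise the validator. As a point of comparison with the regular expression in LookupIPInput, the sketch below performs the same kind of check with Python's standard ipaddress module. This is an alternative shown for illustration, not the code in the repository, and is_ipv4 is a hypothetical helper name.

```python
import ipaddress

def is_ipv4(address: str) -> bool:
    """Return True only for a well-formed dotted-quad IPv4 address."""
    try:
        ipaddress.IPv4Address(address)
        return True
    except ValueError:
        return False

print(is_ipv4("131.252.220.66"))  # True
print(is_ipv4("a.b.c.d"))         # False
print(is_ipv4("256.1.1.1"))       # False
```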

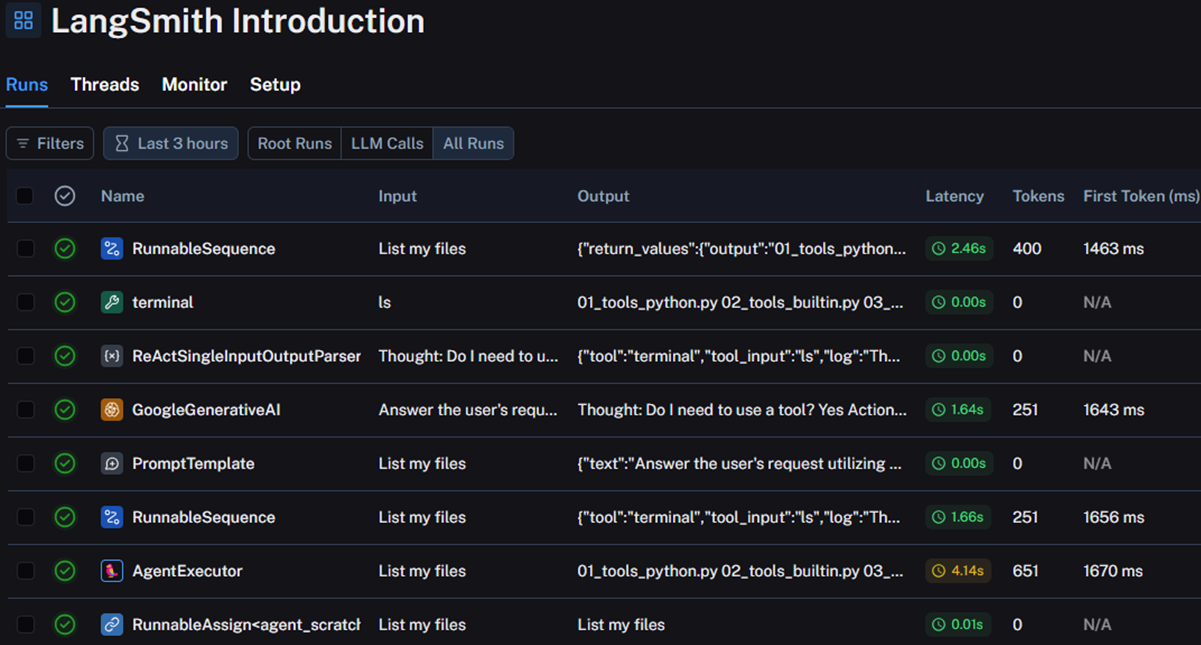

LangSmith is a service hosted by LangChain meant to make debugging, tracing, and information management easier when developing applications using LangChain. LangSmith is especially useful when creating agents, which can have complex interactions and reasoning loops that are hard to debug. LangSmith is accessed with an API key issued from LangChain's site. Ensure the environment variable for specifying the LangChain API key is set before continuing.

Instrument code

LangSmith logging functionality can be easily incorporated into LangChain applications. In the previous custom agent code, we have placed instrumentation code to enable the tracing of the application, but have commented it out. Revisit the code for the agent, then find and uncomment the code below. The code instantiates the LangSmith client and sets environment variables to enable tracing and identify the logging endpoint for a particular project on your LangChain account.

from langsmith import Client

os.environ["LANGCHAIN_TRACING_V2"] = "true"

os.environ["LANGCHAIN_PROJECT"] = "LangSmith Introduction"

os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"

client = Client()

After enabling LangSmith tracing, run the program.

python 07_tools_custom_agent.py

Enter a query. Then, after execution, navigate to the LangSmith projects tab in the UI, where the project "LangSmith Introduction" should appear in the projects list. Select the project and examine the execution logs for the query. Navigate the interface to see the trace information it has collected. Such information will be useful as you build your own agents.

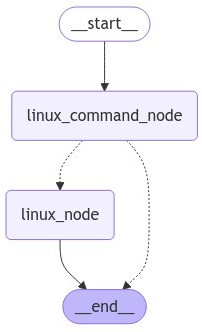

LangGraph is a library that allows one to easily build multi-agent applications. By allowing a developer to specify the logic of the application in a graph-based workflow, it can simplify the development process. The code below implements a simple "human-in-the-loop" Linux command execution application using LangGraph. It consists of three components, two nodes and a conditional check between them:

- A node that produces a Linux command based on a user prompt

def linux_command_node(user_input):

    response = llm.invoke(

        f"""Given the user's prompt, you are to generate a Linux command to answer it. Provide no other formatting, just the command. \n\n User prompt: {user_input}""")

    return response

- A check that determines whether the user wants to execute the command generated

def user_check(linux_command):

    print(f"Linux command is: {linux_command}")

    user_ack = input("Should I execute this command? ")

    response = llm.invoke(f"""The following is the response the user gave to 'Should I execute this command?': {user_ack} \n\n If the answer given is a negative one, return NO else return YES""")

    if 'YES' in response:

        return 'linux_node'

    else:

        return END

- A node that performs the execution and returns the result.

def linux_node(linux_command):

    result = os.system(linux_command)

    return END

A graph can be made for the application using LangGraph. The graph defines the two nodes previously created, then specifies an edge from the start of the graph to the linux_command_node. Then, a conditional edge is defined that is followed based on the result of user_check. The result of user_check sends the control flow either to the linux_node if the user wishes to execute the command or to the end node if the user does not. Finally, after execution by the linux_node, an edge to the end node is defined.

workflow = Graph()

workflow.add_node("linux_command_node", linux_command_node)

workflow.add_node("linux_node", linux_node)

workflow.add_edge(START, "linux_command_node")

workflow.add_conditional_edges(

    'linux_command_node', user_check

)

workflow.add_edge('linux_node', END)

app = workflow.compile()

We can invoke code to visualize the graph we just created.

from IPython.display import Image, display

display(Image(app.get_graph().draw_mermaid_png()))

Run the application in the repository.

python 08_langgraph_linux.py

Test out several queries:

- What is the size of the current directory in megabytes?

- What day is it?

- Find all files that begin with the letter a

- Find all files modified in the last day
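
The control flow of this application can be traced without LangGraph or an LLM. The sketch below is a plain Python stand-in under stated assumptions: generate_command returns a fixed command in place of the model, user_check accepts only an explicit "y" or "yes" rather than asking the LLM to interpret the reply, and nothing is actually executed. Only the node names are taken from the lab code.

```python
END = "__end__"  # sentinel chosen for this sketch

def generate_command(prompt: str) -> str:
    """Stand-in for the LLM call; returns a fixed command for illustration."""
    return "date"

def user_check(answer: str) -> str:
    """Conditional edge: route to execution only on an explicit yes."""
    return "linux_node" if answer.strip().lower() in {"y", "yes"} else END

def run(prompt: str, answer: str) -> tuple:
    """Walk the graph; return the proposed command and the nodes visited."""
    visited = ["linux_command_node"]
    command = generate_command(prompt)
    if user_check(answer) == "linux_node":
        visited.append("linux_node")  # a real node would execute the command here
    visited.append(END)
    return command, visited

print(run("What day is it?", "yes"))
print(run("What day is it?", "no"))
```

The two calls differ only in the path taken: the first passes through linux_node before reaching the end, and the second goes straight to the end.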

The previous lab provided a simple human-in-the-loop application for generating and executing Linux commands. In this lab, we'll apply the approach to agents. Because agents have the potential of creating significant harm when given the autonomy to produce plans and invoke tools, providing a mechanism for humans to validate the commands and code being executed is helpful.

The program in the repository provides a simple human-in-the-loop tool calling application that utilizes LangGraph. As with the prior LangGraph application, execution is interrupted to allow the user to modify the tool call before it is executed. The program first defines two tools that can be called: an execute_command tool for executing shell commands and a python_repl tool for executing Python code. The tools are then bound to the model we instantiate so it is able to invoke them properly. In this case, they are bound to an Anthropic model.

tools = [python_repl, execute_command]

model = ChatAnthropic(model="...", temperature=0).bind_tools(tools)

After defining the tools, the nodes are created. The entry point of the application is the call_model node for generating code and commands. It takes in a set of conversation messages that include the user's query and invokes the model, returning a response.

def call_model(state: MessagesState):

    messages = state['messages']

    response = model.invoke(messages)

    return {"messages": [response]}

The next node in the graph is the human_review_node. It requires no code because the logic for how to route the tool call is done by a function after execution is interrupted.

def human_review_node(state: MessagesState):

    pass

The last node is the tools node (tool_node), which uses a pre-built LangGraph component for invoking the tools that have been defined previously.

tool_node = ToolNode(tools)

While the nodes themselves are simple, the complexity lies in the conditional edges and the function called after the interrupt. The first conditional edge is route_after_call. This function decides whether to end the execution of the application because we have the results of execution, or whether the model has created another tool call that needs to be reviewed by the user. It does so by examining the most recent message in the MessagesState.

def route_after_call(state: MessagesState) -> Literal["human_review_node", END]:

    messages = state['messages']

    last_message = messages[-1]

    if last_message.tool_calls:

        return "human_review_node"

    return END

The next conditional edge is route_after_human.

def route_after_human(state: MessagesState) -> Literal["tools", "call"]:

    last_message = state['messages'][-1]

    # An untouched AIMessage means the user approved the tool call

    if isinstance(last_message, AIMessage):

        return "tools"

    else:

        return "call"

If the user wants to modify the tool call, the chat history is modified so that the type of the last message indicates that flow needs to be routed back to the call node. However, if the user was satisfied with the tool call, then the last message remains an AIMessage and flow continues to the tools node.

The human-in-the-loop feedback mechanism is implemented in get_feedback(). In this function, we pull the proposed tool call out of the message state and prompt the user to approve it. If approved, the message state is left untouched and route_after_human will then pass the tool call to the tools node for execution. However, if the user enters anything other than 'y', then the state is updated by appending a tool message to the end of the message history. Flow of execution is then routed back to the call node when route_after_human is executed. This allows the call node to adjust the tool call based on the user's input. Note that the code manually crafts the feedback as a response to the tool call, since this is what is required to maintain the correct structure of the chat completion template.

def get_feedback(graph, thread):

    state = graph.get_state(thread)

    current_content = state.values['messages'][-1].content

    current_id = state.values['messages'][-1].id

    tool_call_id = state.values['messages'][-1].tool_calls[0]['id']

    tool_call_name = state.values['messages'][-1].tool_calls[0]['name']

    user_response = input(

        "Press y if the tool call looks good\n otherwise supply "

        "an explanation of how you would like to edit the tool call\n"

    )

    if user_response.strip() == "y":

        return

    else:

        # Answer the pending tool call with the user's requested changes

        new_message = {

            "role": "tool",

            "content": f"User requested changes: {user_response}",

            "name": tool_call_name,

            "tool_call_id": tool_call_id

        }

        # Update the graph state here

        graph.update_state(

            thread,

            {"messages": [new_message]},

            as_node="human_review_node"

        )

        return

With all of the components of our application defined, we can then instantiate the graph by creating its nodes, setting its entry point, and defining the edges between nodes. Note that, after the execution of the tools node, we return to the call node for the next task.

workflow = StateGraph(MessagesState)

workflow.add_node("call", call_model)

workflow.add_node("human_review_node", human_review_node)

workflow.add_node("tools", tool_node)

workflow.set_entry_point("call")

workflow.add_conditional_edges("call", route_after_call)

workflow.add_conditional_edges("human_review_node", route_after_human)

workflow.add_edge("tools", "call")

When the graph is compiled, the interrupt_before option is specified. This will stop the execution flow of the graph to obtain human feedback. The checkpointer is specified to store the graph's state, including its messages.

app = workflow.compile(checkpointer=checkpointer, interrupt_before=["human_review_node"])

We then invoke the workflow in a loop to allow a user to interactively test the application. Run the code in the repository.

python 09_langgraph_feedback.py

Test similar queries, but ask the model to modify the tool calls prior to being executed:

- What is the size of the current directory in megabytes?

- What day is it?

- Make a file called empty.txt

- Ask the model to add text to the empty.txt file and to rename it to full.txt

- Determine whether the number 237 is prime

- Sort the list 9, 4, 1, 3, 8, 2

- Calculate the square root of Pi
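
The interaction between the feedback step and route_after_human can be traced with plain dictionaries standing in for LangChain message objects. The sketch below assumes messages are dicts with a "role" key ("assistant" for the model's tool call, "tool" for the user's feedback), and apply_feedback is a hypothetical, simplified counterpart of get_feedback that returns the new history instead of updating graph state.

```python
def apply_feedback(messages: list, user_response: str) -> list:
    """Return the history after the human reviews the last tool call."""
    if user_response.strip() == "y":
        return messages  # approved: history is left untouched
    last = messages[-1]
    feedback = {
        "role": "tool",
        "content": f"User requested changes: {user_response}",
        "tool_call_id": last["tool_calls"][0]["id"],
    }
    return messages + [feedback]

def route_after_human(messages: list) -> str:
    """Approved calls go to the tools node; feedback goes back to the model."""
    return "tools" if messages[-1]["role"] == "assistant" else "call"

history = [
    {"role": "user", "content": "What day is it?"},
    {"role": "assistant", "content": "",
     "tool_calls": [{"id": "call_1", "name": "execute_command"}]},
]

print(route_after_human(apply_feedback(history, "y")))        # tools
print(route_after_human(apply_feedback(history, "use UTC")))  # call
```

Routing on the type of the last message keeps the review node itself empty, since the decision is fully determined by what the feedback step wrote into the history.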